Meta has recently added a set of new safety features to Instagram to better protect teens and child-focused accounts from harmful interactions and exploitation. The most important parts of the update are:

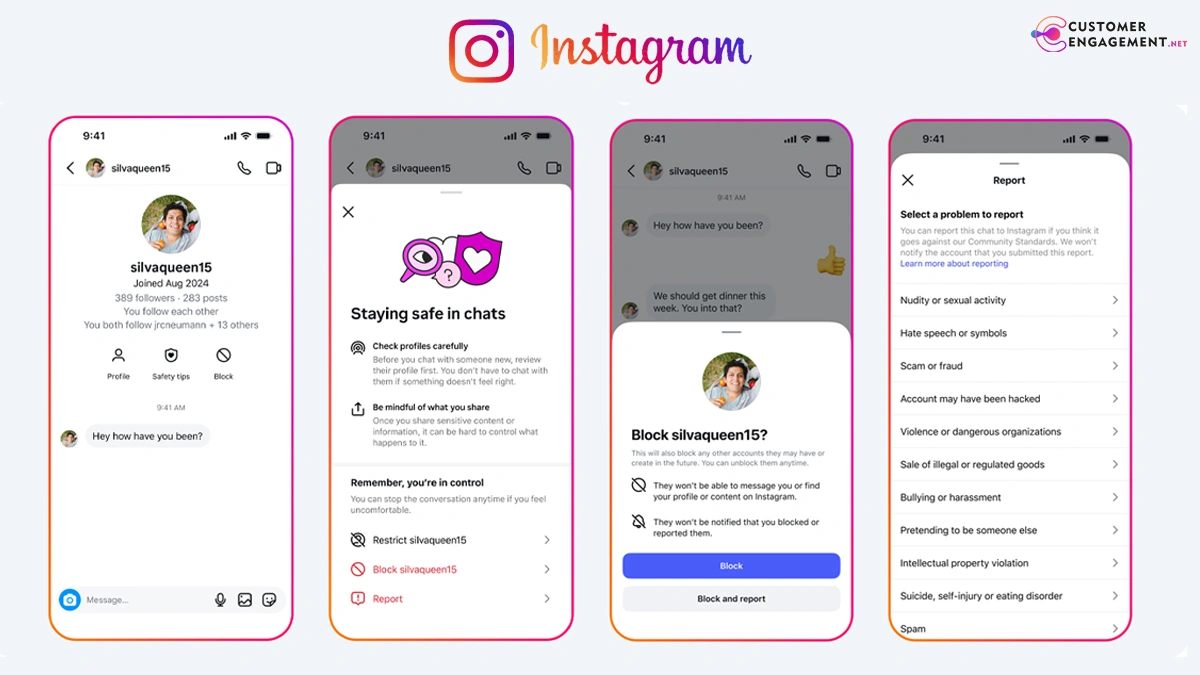

More information in direct messages (DMs): Teen users now see context about who they’re chatting with, such as when the account was created, which helps them spot possible scams or suspicious behaviour. They can also block and report a user with a single tap, which speeds up the safety response.

The combined block-and-report option lets users flag and block a problem account in one step, which makes the platform safer and more responsive.

Location Notice: When teens talk to someone from another country, a warning pops up to let them know about possible dangers like sextortion scams, which have been on the rise.

Nudity protection tool: This tool automatically blurs images in messages that might contain nudity, which keeps teens from seeing explicit content unexpectedly and makes them less likely to send such images in return.

Accounts for kids that are run by adults (like parent-managed or young talent accounts) will automatically get Instagram’s strictest messaging settings, and Hidden Words will filter out offensive comments. These accounts are also made less visible to potentially suspicious adults, which makes it harder for predators to find them.

Meta is testing AI technology to find users who are lying about their age. Users who are thought to be underage are placed into Teen Accounts, which have stricter privacy settings, like private-by-default profiles and messaging limited to known contacts, to better protect younger users.

Meta has recently deleted over 135,000 Instagram accounts that were posting sexualised comments on, or asking for pictures from, accounts featuring minors, as well as an additional 500,000 related Facebook and Instagram accounts. This is part of a larger effort to remove accounts that engage in harmful behaviour.

Safety Impact: In June 2025, teens reportedly blocked over 1 million accounts and reported another million after Instagram sent them safety messages.

These safety features show that Meta is putting more effort into protecting younger users as public concern and legal scrutiny grow over how social media affects the mental health of kids and teens. Critics say that more systemic changes are needed for full youth protection, even though the updates are a big step in the right direction.

Meta’s Instagram safety updates for 2025 will give teens more information and control over messages, make accounts for kids safer, use AI to set age-appropriate settings, and actively delete harmful accounts to make the site safer for young users.